Conversion Optimization with A/B Testing – What It Means & Why It Matters

Learn how Conversion Optimization with A/B Testing boosts engagement and sales with data-driven experiments, smart tools, and proven methods.

Conversion Optimization with A/B Testing is the process of improving a website, app, or funnel by using controlled experiments (A/B tests) to see which variation performs better. Rather than guessing what changes will help, this method uses data to inform decisions.

In this guide, you’ll learn:

- What is conversion optimization with A/B testing

- Key benefits and ROI

- Best practices and pitfalls to avoid

- Step-by-step process for running effective A/B tests

- Tools & platforms to help you

- How to interpret results correctly

- Real world examples

- Future trends

What Is Conversion Optimization with A/B Testing?

Definition of Conversion Optimization

Conversion optimization (or CRO, Conversion Rate Optimization) is the systematic process of increasing the percentage of users who take a desired action — making a purchase, signing up, downloading, etc. It’s not one-time work but an ongoing process of measuring, testing, learning, and improving.

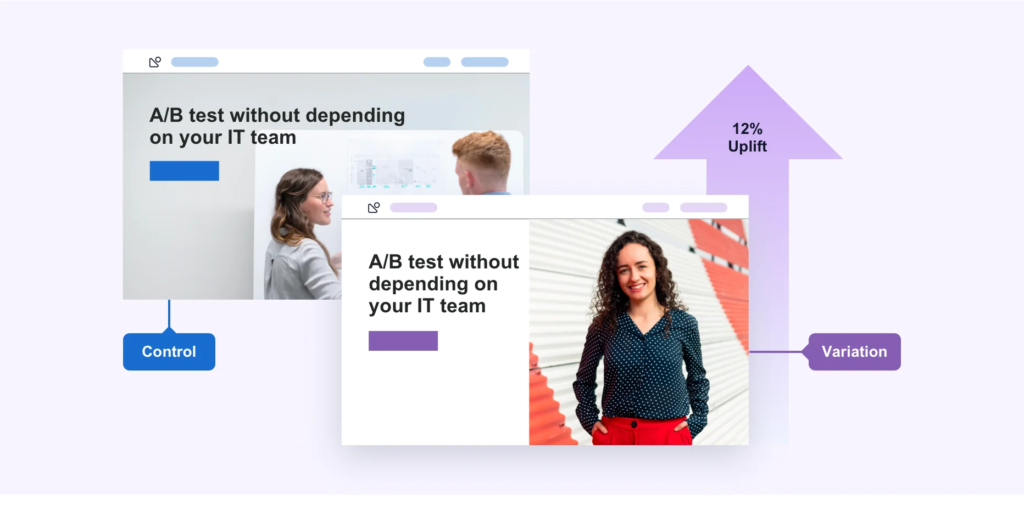

Definition of A/B Testing in the Context

A/B testing is a method where you present two (or more) versions of a web page, email, or element — Version A (control) and Version B (variation) — to similar audiences. You compare their performance on one or more metrics (conversion rate, click-through, time on page, etc.) to see which is better.

When you combine these, conversion optimization with A/B testing becomes a powerful tool to make incremental improvements that are measurable.

Why Conversion Optimization with A/B Testing Is Important

Data-Driven Decisions Reduce Risk

Rather than relying on intuition or guesswork, you’re using actual user behaviour to guide what changes to make. This lowers the risk of changes that might harm conversion.

Improves ROI & Revenue

Small improvements in conversion rate often lead to big gains. Better conversion means more of your existing traffic converts, which is more cost-effective than always acquiring more traffic.

Enhances User Experience

By testing variations, you also learn what users prefer — button placements, colors, wordings, page layouts. Better UX often correlates with higher trust and engagement.

Continuous Improvement & Competitive Edge

Because conversion optimization with A/B testing is iterative, you keep improving over time. Companies that test continuously tend to stay ahead of competitors.

Helps Prioritize Changes

With test results, you know what changes matter most. You can invest resources where the impact is highest (e.g. CTA button, headline, form fields) rather than making random tweaks.

Key Elements of Effective Conversion Optimization with A/B Testing

Clear Goals & Hypotheses

To start, define what conversion means for your case (signup, sale, download). Then form a hypothesis: e.g. “Changing the CTA text from ‘Register’ to ‘Get Started’ will increase sign-ups by 10%.”

Identify Metrics & KPIs

Pick your primary metric (conversion rate), and supporting metrics (bounce rate, time on page, exit rate). Also define what level of improvement is meaningful.

Understand Your Audience & Segments

Different audience segments may respond differently. For example, new visitors vs returning visitors, mobile vs desktop, geographical differences. Conversion optimization with A/B testing should account for segmentation.

Isolate Variables – Test One Thing at a Time

To attribute change properly, test one element at a time (headline, button color, layout, etc.). If you test many changes at once, you may not know which change caused the effect.

Sample Size & Test Duration

Ensure your test has enough traffic (visitors) and runs long enough to reach statistical significance. Too small a sample or too short a duration risks misleading results.
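
As a rough illustration of how much traffic is "enough", the sketch below uses a standard normal-approximation formula for comparing two proportions. The function name, the 5% significance level, the 80% power, and the example rates are assumptions chosen for illustration; your testing tool may use a different method.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variant (two-sided test).

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_lift: smallest relative improvement worth detecting, e.g. 0.10
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical example: 5% baseline, hoping to detect a 10% relative lift.
print(sample_size_per_variant(0.05, 0.10))
```

Note how quickly the requirement grows as the lift you want to detect shrinks: halving the detectable lift roughly quadruples the sample needed.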

Randomization & Traffic Split

Divide traffic randomly and fairly between your variants. This helps ensure external factors or biases don’t skew results.
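
Most testing tools handle the split for you. If you ever need to do it yourself, one common approach is to hash a stable user identifier so that each visitor always lands in the same variant. This is a minimal sketch; the function name, the experiment key, and the user ID format are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "variation")):
    """Deterministically map a user to a variant with a roughly even split."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# The same user always gets the same answer for the same experiment.
print(assign_variant("user-42", "cta-text"))
```

Because the assignment depends only on the identifier and the experiment name, returning visitors see a consistent experience without any stored state.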

Statistical Significance & Confidence

Choose an appropriate confidence level (often 95%) and use a statistical test to check that the observed difference is unlikely to be due to chance. Avoid premature conclusions.
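
One widely used test for comparing two conversion rates is the two-proportion z-test. The sketch below is a simplified illustration, not the method of any particular tool; the function name and the visitor and conversion counts are made up.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control 500/10,000 vs variation 570/10,000.
z, p_value = two_proportion_z_test(500, 10_000, 570, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 95%" if p_value < 0.05 else "not significant at 95%")
```

At a 95% confidence level, a p-value below 0.05 is the usual threshold for calling the difference significant.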

UX, Design & Copy Considerations

Often the biggest wins in conversion optimization with A/B testing come from tweaking copy (headline, CTA wording), design (button color, layout), speed (page load), and form usability.

Qualitative Insights Alongside Quantitative Data

Tools such as heatmaps, user recordings, and surveys help you understand why people behave in certain ways, not just what they do. These insights help generate test ideas.

Best Practices & Common Pitfalls in Conversion Optimization with A/B Testing

Best Practices

- Start simple, prioritize high-impact elements: Work on elements that likely move the needle (CTAs, headlines, pricing).

- Run tests long enough: Ensure each variation gets enough exposure. Stopping at an early “win” may lead to false positives.

- Segment your audience: Compare performance across segments (device type, geography, new vs returning).

- Maintain consistency in branding and messaging: Variation should respect your brand guidelines so trust isn’t lost.

- Use control groups and credible statistical methods: To avoid misleading conclusions.

- Document everything & maintain test tracking: Keep a log of what you tested, hypothesis, results, date, duration. This helps future tests.

Common Pitfalls

- Ending tests too early (before reaching significance)

- Testing too many variables at once without proper design

- Not accounting for external factors (seasonality, marketing campaigns) that may bias results

- Low traffic sites: insufficient sample may yield noisy or inconclusive data

- Focusing only on micro-conversions while ignoring macro conversions (e.g. optimizing for add-to-cart clicks rather than the number of sales)

- Ignoring user experience: sometimes a variant that converts more may frustrate users in other ways

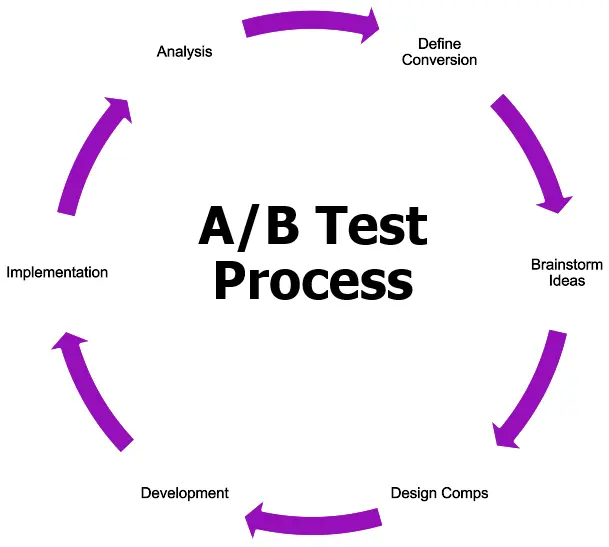

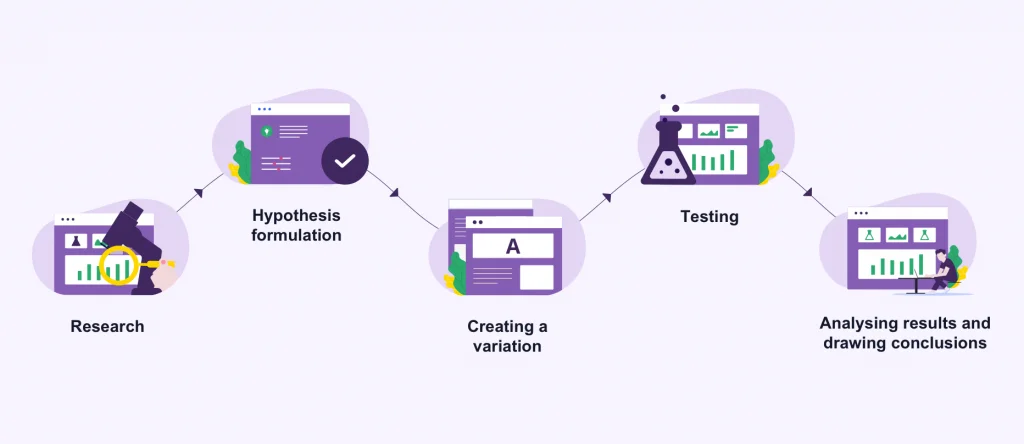

Step-by-Step Process: How to Execute Conversion Optimization with A/B Testing

Here’s a practical roadmap to follow:

Step 1 – Research & Identify Problem Areas

- Use analytics tools (Google Analytics, Hotjar, etc.) to find where users drop off or bounce

- Use heatmaps and session recordings to see behaviour

- Collect feedback and run surveys to learn about user concerns

Step 2 – Form Hypotheses

- Based on data, propose what change might improve conversion

- Example: If many users abandon at the checkout page, the hypothesis could be “Shortening checkout form from 5 fields to 3 fields will increase completion rate by 15%”

Step 3 – Prioritize Tests

- Assess each hypothesis for potential impact, ease of implementation, risk

- Use prioritization frameworks like ICE (Impact, Confidence, Ease)
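
To show how ICE scoring can turn a backlog into a ranked list, here is a small sketch. It assumes each factor is rated from 1 to 10 and that the score is the average of the three ratings; some teams multiply the ratings instead. The ideas and ratings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 1-10: how much could this move the primary metric?
    confidence: int  # 1-10: how sure are we, based on the research?
    ease: int        # 1-10: how cheap and quick is it to build?

    @property
    def ice_score(self) -> float:
        # Averaging convention (an assumption); multiplying is also common.
        return (self.impact + self.confidence + self.ease) / 3


# Hypothetical backlog.
ideas = [
    Idea("Change CTA text", impact=5, confidence=6, ease=9),
    Idea("Shorten checkout form", impact=8, confidence=7, ease=6),
    Idea("Redesign pricing page", impact=9, confidence=5, ease=2),
]

ranked = sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)
for idea in ranked:
    print(f"{idea.ice_score:.2f}  {idea.name}")
```

The ratings are subjective, so the value of the exercise is mainly in forcing an explicit comparison between ideas.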

Step 4 – Design Variations

- Create variant(s) for testing (for example CTA color change, new headline, alternative layout)

- Ensure the variation is distinct enough to produce measurable difference

Step 5 – Setup A/B Test

- Choose A/B testing tool

- Randomize and split traffic evenly across control vs variant

- Make sure tracking and analytics are working properly
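
One way to sanity-check the setup is to compare how many visitors each variant actually received against the split you configured. The sketch below uses a chi-square goodness-of-fit test for two groups; the function name, the counts, and the strict 0.001 alert threshold are assumptions for illustration.

```python
from math import erfc, sqrt


def split_check_p_value(n_control, n_variant, expected_share_control=0.5):
    """P-value for the observed split versus the configured split.

    A very small value suggests the randomization or tracking is broken.
    """
    total = n_control + n_variant
    expected_control = total * expected_share_control
    expected_variant = total - expected_control
    chi2 = (
        (n_control - expected_control) ** 2 / expected_control
        + (n_variant - expected_variant) ** 2 / expected_variant
    )
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return erfc(sqrt(chi2 / 2))


# Hypothetical counts from a test configured as a 50/50 split.
p = split_check_p_value(5_000, 5_600)
print("investigate the setup" if p < 0.001 else "split looks consistent")
```

If the check fails, it is usually safer to fix the setup and restart the test than to analyze the skewed data.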

Step 6 – Run the Test & Monitor

- Let test run for predetermined time or until sufficient sample size is reached

- Monitor for anomalies; ensure external factors (traffic spikes, external campaigns) are noted

Step 7 – Analyze Results

- Check primary metric (conversion) and supporting metrics for unintended effects (bounce, session length, etc.)

- Confirm statistical significance

- See performance by segments
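
A segment breakdown can be as simple as grouping visitor records by segment and variant. This sketch uses a tiny, made-up dataset purely to show the mechanics; real segments need far more data, and slicing results into many segments raises the chance of a false positive in at least one of them.

```python
from collections import defaultdict

# Hypothetical per-visitor records: (segment, variant, converted)
records = [
    ("desktop", "A", True), ("desktop", "A", False),
    ("desktop", "B", True), ("desktop", "B", True),
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", False), ("mobile", "B", False),
]


def conversion_by_segment(rows):
    """Return {(segment, variant): conversion rate}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variant, converted in rows:
        cell = counts[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conv / n for key, (conv, n) in counts.items()}


rates = conversion_by_segment(records)
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:8s} {variant}  {rate:.0%}")
```

In this toy data, variant B looks better on desktop and worse on mobile, which is exactly the kind of pattern an overall average can hide.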

Step 8 – Implement Winner & Learn

- If variant wins, roll it out broadly

- If no clear winner, analyze why: maybe the change was too small, or sample too small, or the hypothesis flawed

- Document what worked & what didn’t

Step 9 – Iterate & Scale

- Use learnings to generate new test ideas

- Build a continuous experimentation culture

- Apply successful changes also to similar pages or user flows

Tools & Platforms for Conversion Optimization with A/B Testing

Here are some popular tools that help you implement conversion optimization with A/B testing efficiently:

- Google Optimize (note: Google discontinued it on September 30, 2023, so consider the alternatives below)

- VWO (Visual Website Optimizer) — supports A/B, split, multivariate tests

- Optimizely — enterprise level, advanced targeting, personalization

- Adobe Target — robust for larger organizations

- Unbounce — good for landing pages & simpler tests

- Hotjar / FullStory — for understanding qualitative behaviour alongside test data

- A/B testing modules built into CMSs, website builders, and e-commerce platforms such as Shopify

When selecting tools, consider:

- Traffic volume you have

- Types of tests (multivariate? split URL? layout? content?)

- Budget & pricing

- Ease of integration & reporting

Real-World Examples of Conversion Optimization with A/B Testing

Here are some case studies to illustrate how this works in real life:

- Transavia used Google Analytics and Google Optimize to test its mobile homepage design. Reported result: a 77% drop in bounce rate on mobile and a ~5% increase in conversion (source: services.google.com).

- Many e-commerce brands test CTA placements, button wording, checkout form length, product page layout. Small changes often produce measurable uplifts.

These show that even modest improvements, when tested and implemented, accumulate into significant gains.

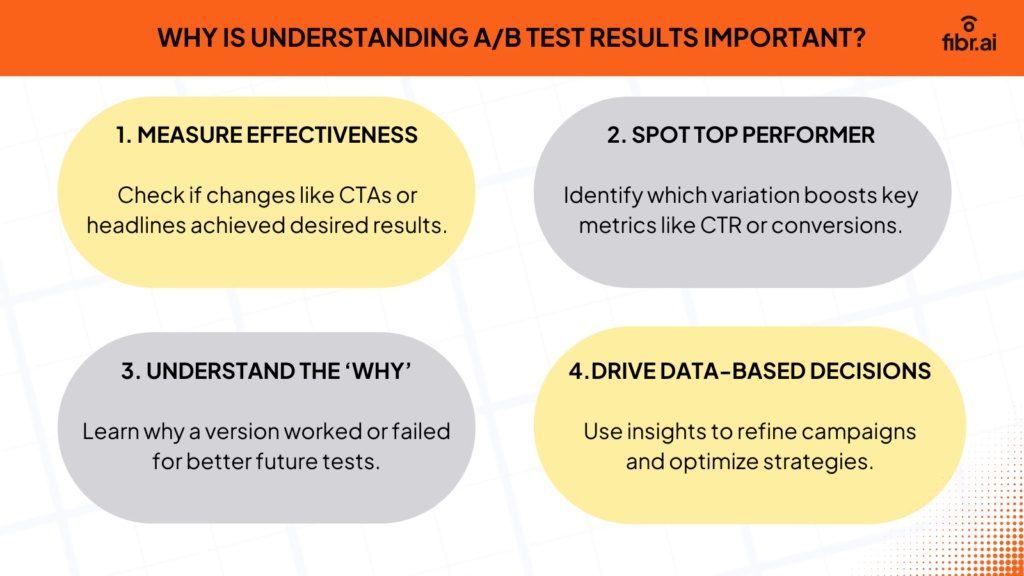

How to Interpret Results Properly in Conversion Optimization with A/B Testing

Statistical Significance vs Practical Significance

A result may be statistically significant but may not yield enough business value. For example, a 1% increase in conversion might be statistically valid but may not cover the cost of implementing change.
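
A quick back-of-the-envelope calculation can show whether a statistically valid lift is worth shipping. The sketch below treats the 1% as a relative lift, and every number in it (traffic, baseline rate, profit per conversion, implementation cost) is a made-up assumption.

```python
def extra_monthly_profit(visitors, baseline_rate, relative_lift, profit_per_conversion):
    """Additional profit per month attributable to the lift."""
    extra_conversions = visitors * baseline_rate * relative_lift
    return extra_conversions * profit_per_conversion


def months_to_break_even(implementation_cost, monthly_gain):
    """How long until the change pays for itself."""
    if monthly_gain <= 0:
        return float("inf")
    return implementation_cost / monthly_gain


# Hypothetical site: 20,000 visitors/month, 2% baseline, 1% relative lift.
gain = extra_monthly_profit(20_000, 0.02, 0.01, profit_per_conversion=30.0)
payback = months_to_break_even(6_000, gain)
print(f"Extra profit per month: {gain:.0f}")
print(f"Months to break even: {payback:.0f}")
```

With these inputs the change takes years to pay back, so a result can clear the statistical bar and still fail the business one.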

Look for Negative Side-Effects

Sometimes a variant might increase conversions for one metric but worsen others (e.g. increased bounce on other pages, decreased average order value). Monitor multiple KPIs.

Segment Analysis

Break down results by device, location, referral source. A variant may perform well on desktop but poorly on mobile.

Confidence Intervals & Sample Size Checking

Use confidence intervals to understand uncertainty; verify sample sizes are sufficient; beware overfitting to noise.
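
As an illustration, the sketch below computes a simple normal-approximation (Wald) confidence interval for the absolute difference between two conversion rates. The function name and the counts are hypothetical, and other interval methods exist that behave better with small samples.

```python
from math import sqrt
from statistics import NormalDist


def difference_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald interval for (rate of B) - (rate of A), in absolute terms."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical counts: control 500/10,000 vs variation 570/10,000.
low, high = difference_confidence_interval(500, 10_000, 570, 10_000)
print(f"Difference in conversion rate: {low:+.2%} to {high:+.2%}")
```

A wide interval, or one that includes zero, is a sign that the test needs more data before you act on it.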

Duration & Time Effects

Tests that cover only part of a week, or that overlap holidays or special marketing campaigns, may be skewed. Running the test over full weekly cycles helps mitigate these time effects.

Common Misconceptions about Conversion Optimization with A/B Testing

- Myth: Big changes always yield best results — Sometimes small tweaks are more reliable and less risky.

- Myth: A/B testing gives instant answers — It often takes time, patience, and repeated tests.

- Myth: All traffic is equal — Differences in segments matter a lot.

- Myth: Higher conversion rate always means better — Need to balance with margins, lifetime value, user satisfaction.

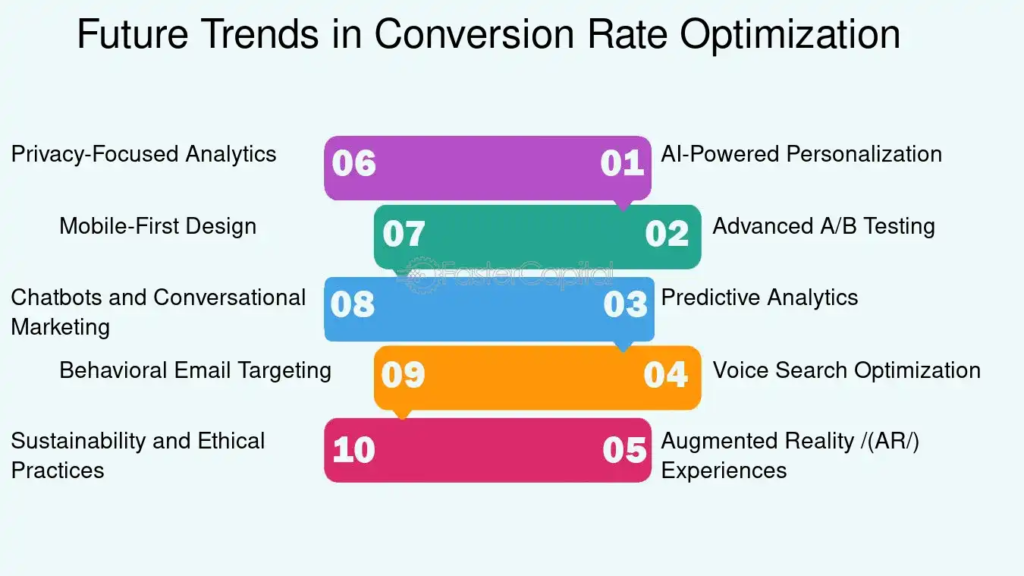

Future Trends in Conversion Optimization with A/B Testing

- Greater use of multi-armed bandit approaches (testing many variants and gradually shifting traffic toward the better performers)

- Increased personalization + dynamic content (variations customized per user)

- More AI / machine-learning to suggest variants & predict outcomes

- Hybrid experimentation: combining A/B testing with qualitative research and UX testing

- Real-time and sequential testing with always-valid statistics (so you don’t need to pick fixed durations)
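
To give a feel for how a multi-armed bandit shifts traffic, here is a small simulation using Thompson sampling, one of several bandit strategies. The conversion rates are invented and would be unknown in a real test; the function name and seed are also just for illustration.

```python
import random


def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate traffic allocation; returns how many visitors each variant got."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    pulls = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its Beta posterior.
        samples = [
            rng.betavariate(successes[i] + 1, failures[i] + 1)
            for i in range(len(true_rates))
        ]
        arm = samples.index(max(samples))  # send this visitor to the best draw
        pulls[arm] += 1
        if rng.random() < true_rates[arm]:  # simulated conversion
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


# Hypothetical variants converting at 5% and 15%.
pulls = thompson_sampling([0.05, 0.15], rounds=5_000)
print(pulls)
```

Unlike a fixed 50/50 split, most simulated visitors end up on the stronger variant as evidence accumulates, which reduces the cost of showing a weaker version.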

FAQ: Conversion Optimization with A/B Testing

Here are frequently asked questions to clear doubts.

Q1. How long should an A/B test run?

A: Until enough data (sample size) is collected to reach statistical significance. Running too short risks false results. Also ensure test spans typical traffic cycles (weekdays, weekends, etc.).
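
As a rough planning aid, the sketch below converts a required sample size into a run time and rounds up to whole weeks so the test covers complete traffic cycles. The function name and all numbers are hypothetical.

```python
from math import ceil


def estimate_duration_days(sample_per_variant, num_variants, daily_visitors):
    """Days needed to reach the sample size, rounded up to full weeks."""
    days_needed = ceil(sample_per_variant * num_variants / daily_visitors)
    return ceil(days_needed / 7) * 7


# Hypothetical: 31,000 visitors per variant, 2 variants, 4,000 visitors/day.
print(estimate_duration_days(31_000, 2, 4_000), "days")
```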

Q2. How big should the sample size be?

A: Depends on current conversion rate, minimum detectable effect, confidence level. You can use online calculators to estimate sample size based on your baseline conversion rate and desired improvement.

Q3. Can I test multiple elements at once?

A: You can, but that becomes multivariate testing. It’s more complex and requires more traffic. For most beginners, testing one element at a time is safer for clarity.

Q4. What if my site has low traffic?

A: For low-traffic sites, go for higher-impact changes, longer test durations, or focus on qualitative insights. Also consider pooling results or rolling out tested changes gradually. Sometimes A/B testing may be less viable, but you can still do small-scale experiments.

Q5. What tools should I use?

A: Choose based on traffic, budget, ease of use. Tools like VWO, Optimizely, Unbounce, and others offer good interfaces. For simpler tests, built-in tools inside CMS or smaller solutions can suffice.

Q6. Will testing annoy users?

A: Usually not, if variants are reasonable and changes are not drastic. But avoid constantly changing user experience in disorienting ways. Also ensure performance (speed, UX) remains smooth.

Q7. How often should I run A/B tests?

A: Continuously, but not too rapidly. You should allow each test to finish properly. After one test is implemented, plan the next based on insights. Over time, many small improvements compound.

Summary & Action Plan for Conversion Optimization with A/B Testing

To wrap up:

- Conversion Optimization with A/B Testing is not a one-off but an ongoing process

- Start with clear goals and hypotheses

- Test one variable at a time, ensure proper sampling, track multiple KPIs

- Use qualitative + quantitative data for insights

- Implement winning variations, iterate continuously

Action Plan:

- Audit your website or funnel to find pages with high drop-off or low conversion.

- Pick one change with high potential impact (e.g. CTA, form fields, layout).

- Define hypothesis, set up A/B test with a tool.

- Run test for sufficient duration.

- Analyze data, roll out winner, and document.

- Repeat with next hypothesis.
