Introduction to AI Ethics & Bias Detection

Discover how AI Ethics & Bias Detection are shaping the responsible future of artificial intelligence. Learn strategies, tools, and real-world solutions to build fair, transparent, and trustworthy AI systems in 2025.

Artificial Intelligence is revolutionizing the world — but as it grows, so do ethical concerns. AI Ethics & Bias Detection have become essential to ensure fairness, transparency, and trust in every algorithm that impacts human lives.

Whether it’s in hiring, finance, healthcare, or marketing, biased AI systems can lead to real-world harm. That’s why the global focus in 2025 is on creating ethical AI models that detect and correct bias before deployment.

What is AI Ethics?

AI Ethics is the framework that guides the responsible design, development, and use of AI systems. It ensures that AI respects human rights, fairness, accountability, and inclusivity.

Key ethical principles include:

- Fairness: AI should not systematically disadvantage individuals or groups, particularly on protected attributes such as gender or race.

- Transparency: Algorithms must be explainable and auditable.

- Accountability: Developers and organizations should be responsible for AI outcomes.

- Privacy: AI must respect personal and sensitive data.

Why AI Ethics & Bias Detection Matter in 2025

As AI becomes more integrated into decision-making systems, AI Ethics & Bias Detection ensure that automation does not amplify discrimination.

For instance, an AI hiring system trained on biased data may favor one gender over another. In 2025, ethical AI practices are not optional — they are a legal and reputational necessity.
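To make the hiring example concrete, here is a minimal sketch of one common check: comparing selection rates between groups. The data, group names, and function names are all hypothetical. The 0.8 cutoff mentioned in the comments follows the "four-fifths" rule of thumb from US employment guidelines and is a screening heuristic, not a legal test.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the system shortlisted the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group, was the candidate shortlisted?)
decisions = (
    [("men", True)] * 30 + [("men", False)] * 70
    + [("women", True)] * 18 + [("women", False)] * 82
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'men': 0.3, 'women': 0.18}
print(round(ratio, 2))  # 0.6, below the 0.8 rule of thumb, so worth investigating
```

A low ratio does not prove discrimination on its own, but it flags where a closer audit is needed.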

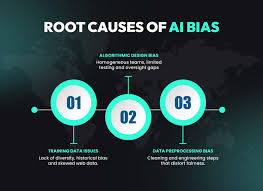

Understanding AI Bias: The Root of the Problem

AI bias often originates from data imbalance, algorithmic design, or human prejudice encoded into datasets.

Common sources of bias include:

- Historical data that reflects social inequalities

- Sampling errors in training datasets

- Lack of diversity in AI development teams

AI bias can lead to inaccurate predictions, unfair decisions, and loss of public trust.
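A first look for data imbalance can be as simple as counting. The sketch below assumes training records are dictionaries with a group field and a binary label. Both field names and the sample data are made up for illustration.

```python
from collections import Counter


def audit_dataset(rows, group_key, label_key):
    """Summarize each group's share of the data and its positive-label rate."""
    group_counts = Counter(row[group_key] for row in rows)
    positive_counts = Counter(
        row[group_key] for row in rows if row[label_key] == 1
    )
    total = len(rows)
    return {
        group: {
            "share": count / total,
            "positive_rate": positive_counts[group] / count,
        }
        for group, count in group_counts.items()
    }


# Hypothetical training records; the field names are illustrative only.
rows = (
    [{"group": "A", "label": 1}] * 60 + [{"group": "A", "label": 0}] * 20
    + [{"group": "B", "label": 1}] * 5 + [{"group": "B", "label": 0}] * 15
)

report = audit_dataset(rows, "group", "label")
for group, stats in sorted(report.items()):
    print(group, f"share={stats['share']:.2f}",
          f"positive_rate={stats['positive_rate']:.2f}")
# A share=0.80 positive_rate=0.75
# B share=0.20 positive_rate=0.25
```

Here group B is both under-represented and far less likely to carry a positive label, two patterns a model can easily learn and repeat.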

Real-World Examples of AI Bias

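- Facial Recognition: The 2018 Gender Shades study found that commercial facial analysis systems had markedly higher error rates for darker-skinned women than for lighter-skinned men, and later NIST testing reported demographic differences in false-match rates across many face recognition algorithms.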

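- Hiring Algorithms: Amazon scrapped an experimental recruiting tool after finding that it favored male candidates, having learned from a decade of résumés submitted mostly by men (reported by Reuters in 2018).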

- Loan Approval Models: Lending models have sometimes produced discriminatory outcomes by race, including indirectly through proxies such as ZIP codes.

Each example highlights the urgent need for AI Ethics & Bias Detection frameworks.

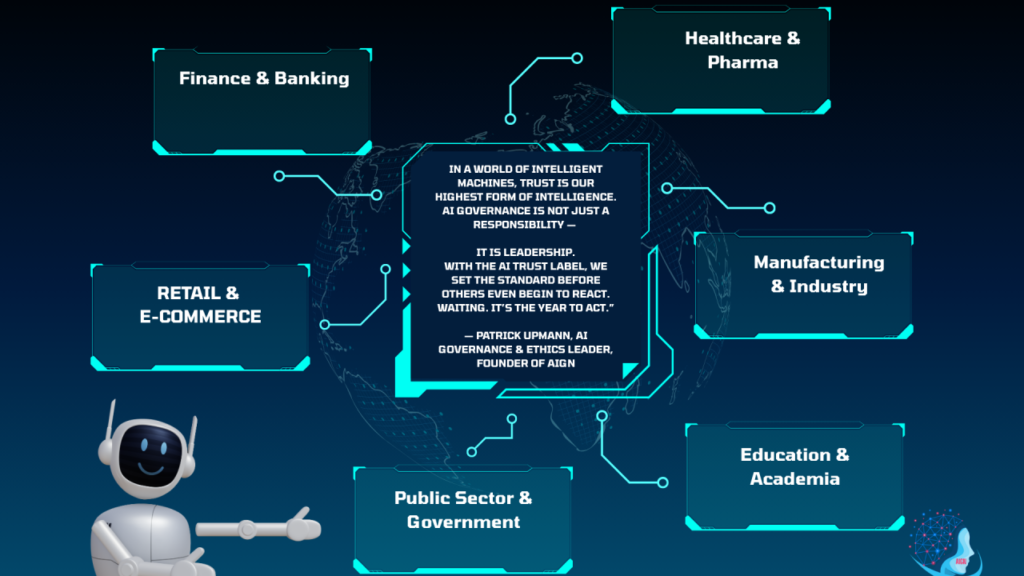

The Role of AI Ethics Committees and Governance

In 2025, many organizations have established AI Ethics Committees that oversee responsible deployment. These committees are tasked with checking compliance with local and international AI regulations.

Examples include:

- Google’s AI Principles emphasizing fairness and transparency

- EU AI Act focusing on risk-based AI regulation

These measures ensure that AI technologies align with societal values.

AI Ethics & Bias Detection in Machine Learning Models

Machine learning models rely heavily on data — which can be flawed. That’s why AI Ethics & Bias Detection are implemented during data collection, model training, and testing phases.

Open-source toolkits help with this: TensorFlow Fairness Indicators evaluates fairness metrics across slices of data, and IBM AI Fairness 360 adds bias mitigation algorithms on top of its metrics.

Top Tools for AI Ethics & Bias Detection

Here are top-rated tools that businesses and developers use in 2025 for AI Ethics & Bias Detection:

- IBM AI Fairness 360

- Google What-If Tool

- Fairlearn (Microsoft)

- Aequitas by University of Chicago

- H2O.ai Responsible AI

Each tool provides visualization and fairness metrics to help developers maintain ethical AI standards.
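To show what kind of fairness metrics these tools report, here is a plain-Python sketch that computes selection rate, true positive rate, and false positive rate per group, then the largest gap for each. It does not use any of the tools' APIs. The function names and toy data are assumptions for illustration.

```python
def rates_by_group(y_true, y_pred, groups):
    """Compute selection rate, TPR, and FPR for each group (binary 0/1 labels)."""
    stats = {}
    for group in sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if g == group]
        positives = [i for i in idx if y_true[i] == 1]
        negatives = [i for i in idx if y_true[i] == 0]
        if not positives or not negatives:
            raise ValueError(f"group {group!r} needs both positive and negative labels")
        stats[group] = {
            "selection_rate": sum(y_pred[i] for i in idx) / len(idx),
            "tpr": sum(y_pred[i] for i in positives) / len(positives),
            "fpr": sum(y_pred[i] for i in negatives) / len(negatives),
        }
    return stats


def gap(stats, metric):
    """Largest difference in `metric` between any two groups."""
    values = [group_stats[metric] for group_stats in stats.values()]
    return max(values) - min(values)


# Toy labels and predictions for two hypothetical groups of six people each.
y_true = [1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

stats = rates_by_group(y_true, y_pred, groups)
for metric in ("selection_rate", "tpr", "fpr"):
    print(metric, round(gap(stats, metric), 2))
# selection_rate 0.5
# tpr 0.67
# fpr 0.33
```

The selection-rate gap corresponds to demographic parity, while the TPR and FPR gaps together correspond to equalized odds. Which one matters most depends on the use case.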

Techniques to Identify and Remove AI Bias

- Data Auditing: Evaluate datasets for imbalance or prejudice.

- Re-sampling & Re-weighting: Adjust data distributions for fairness.

- Algorithmic Fairness Constraints: Embed fairness into model training.

- Post-processing Correction: Modify outcomes to reduce bias impact.

These techniques form the foundation of modern AI Ethics & Bias Detection processes.
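As one example of re-weighting, the sketch below follows the reweighing idea described by Kamiran and Calders: each training example gets the weight P(group) × P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The data and helper names are hypothetical, and a real project would more likely use a maintained library implementation.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    members = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in members if y == 1) / sum(w for _, w in members)


# Hypothetical data: group A is mostly labeled positive, group B mostly negative.
groups = ["A"] * 8 + ["B"] * 4
labels = [1] * 6 + [0] * 2 + [1] * 1 + [0] * 3

uniform = [1.0] * len(labels)
weights = reweighing_weights(groups, labels)

for name, w in (("before", uniform), ("after", weights)):
    rate_a = weighted_positive_rate(groups, labels, w, "A")
    rate_b = weighted_positive_rate(groups, labels, w, "B")
    print(name, round(rate_a, 3), round(rate_b, 3))
# before 0.75 0.25
# after 0.583 0.583
```

After reweighing, both groups show the same weighted positive rate (the overall rate of 7 in 12), and the weights can be passed to any training routine that accepts per-sample weights.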

Transparency and Explainable AI

Explainable AI (XAI) enables humans to understand how algorithms make decisions. It’s a core part of AI Ethics & Bias Detection, ensuring accountability and user trust.

Libraries such as LIME and SHAP are popular for this: both attribute an individual prediction to the input features that drove it.
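SHAP builds on Shapley values from game theory. The sketch below computes exact Shapley values for a tiny, made-up scoring function by averaging each feature's marginal contribution over every feature ordering. It is not the SHAP library's API, and it only scales to a handful of features. The scoring rule, feature names, and all-zero baseline are assumptions for illustration.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features not yet "revealed" take their baseline value. Each feature's
    attribution is its average marginal contribution over all orderings.
    """
    features = list(instance)
    contributions = {feature: 0.0 for feature in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)
        previous = predict(current)
        for feature in order:
            current[feature] = instance[feature]
            score = predict(current)
            contributions[feature] += score - previous
            previous = score
    return {f: total / len(orderings) for f, total in contributions.items()}


def credit_score(x):
    # Hypothetical scoring rule with an income-tenure interaction term.
    return (2.0 * x["income"] + 1.0 * x["tenure"]
            + 0.5 * x["income"] * x["tenure"] - 3.0 * x["defaults"])


instance = {"income": 4.0, "tenure": 2.0, "defaults": 1.0}
baseline = {"income": 0.0, "tenure": 0.0, "defaults": 0.0}

attributions = shapley_values(credit_score, instance, baseline)
print(attributions)  # {'income': 10.0, 'tenure': 4.0, 'defaults': -3.0}
print(sum(attributions.values()), credit_score(instance))  # 11.0 11.0
```

The attributions add up to the gap between the prediction and the baseline score, which is the property that makes this kind of explanation easy to audit.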

Ethical AI Frameworks by Global Organizations

- OECD AI Principles

- UNESCO Recommendation on the Ethics of AI

- IEEE Ethically Aligned Design

These frameworks provide global guidelines for AI fairness, privacy, and accountability.

How Businesses Can Implement AI Ethics Policies

Organizations can establish internal ethics frameworks by:

- Forming AI governance boards

- Conducting bias audits

- Publishing transparency reports

- Offering ethics training for AI developers

These steps ensure responsible innovation.

Regulations and Future Standards of AI Ethics

Governments worldwide are setting strict standards for AI Ethics & Bias Detection.

The EU AI Act, which entered into force in August 2024 with obligations phasing in from 2025 onward, and India’s AI Governance Framework aim to prevent misuse of AI in critical areas like security and finance.

AI Ethics & Bias Detection in Generative AI

With tools like ChatGPT, Midjourney, and Gemini, generative AI introduces new ethical questions — including data privacy, misinformation, and content bias.

AI Ethics & Bias Detection help regulate how these systems handle sensitive or biased data during text or image generation.

AI and Human Rights: The Moral Responsibility

AI must never compromise human dignity, privacy, or equality.

That’s why ethics boards and international bodies work to align AI with human rights principles, drawing on frameworks like the UN’s Sustainable Development Goals (SDGs).

The Future of Ethical AI: 2025 and Beyond

By 2030, we expect AI systems to self-monitor for fairness using embedded ethical algorithms.

The future of AI Ethics & Bias Detection lies in automation: AI that can detect, learn from, and correct its own biases in real time.

Conclusion: Toward Fairer, Smarter AI

As we move forward, AI Ethics & Bias Detection will be central to trustworthy innovation.

Developers, businesses, and regulators must work together to ensure technology serves everyone — equally and ethically.

FAQs

1. What is AI Ethics & Bias Detection?

It’s the process of ensuring AI systems act fairly and do not discriminate against any group or individual.

2. Why is AI Ethics important in 2025?

Because AI influences major life decisions — from jobs to healthcare — and must be unbiased to remain trustworthy.

3. Which tools are best for AI Bias Detection?

IBM AI Fairness 360, Fairlearn, and Google’s What-If Tool are leading solutions.

4. How can companies ensure ethical AI?

By forming ethics committees, conducting regular audits, and adhering to global standards.

5. What’s the future of AI Ethics?

Self-regulating AI systems with real-time bias monitoring and built-in transparency.
